The hardest part about web scraping can be getting to the data you want to scrape.

For example, you might want to scrape data from a search results page for a number of keywords.

This tutorial explains how you can scrape Google Search results and save the listings in a Google Spreadsheet. That can be useful for monitoring the organic search rankings of your website in Google for particular search keywords against competing websites.

Web scraping with Python is approachable because of the large selection of libraries available, although a barebones installation isn’t enough on its own. For this Python web scraping tutorial, we’ll be using three important libraries: BeautifulSoup v4, Pandas, and Selenium.

Next, capture the term that was entered. Click the search box and select “Extract value of this item”. The search term you just captured will be added to the extracted results. Then click the search button and choose “Click an item”. The information I want is on the detail page, so I need to create a list of items to get to that page.

You might set up a separate scraping project for each keyword.

However, there are powerful web scrapers that can automate the searching process and scrape the data you want.

Today, we will set up a web scraper to search through a list of keywords and scrape data for each one.

A Free and Powerful Web Scraper

For this project, we will use ParseHub, a free and powerful web scraper that can scrape data from any website. Make sure to download and install ParseHub for free before we get started.

We will also scrape data from Amazon’s search result page for a short list of keywords.

Searching and Scraping Data from a List of Keywords

Now it’s time to set up our project and start scraping data.

- Install and Open ParseHub. Click on “New Project” and enter the URL of the website you will be scraping from. In this case, we will scrape data from Amazon.ca. The page will then render inside the app and allow you to start extracting data.

- Now, we need to give ParseHub our list of keywords we will be searching through to extract data. To do this, click on the settings icon at the top left and click on “settings”.

- Under the “Starting Value” section you can enter your list of keywords either as a CSV file or in JSON format right in the text box below it.

- If you’re using a CSV file to upload your keywords, make sure you have a header cell. In this case, it will be the word “keywords”.

- Once you’ve submitted your list of keywords, click on “Back to Commands” to go back to your project. Click on the PLUS (+) sign next to your “page” selection, click on Advanced and click on the “Loop” command.

- By default, your list of keywords will be selected as the list of items to loop through. If not, make sure to select “keywords” from the dropdown.

- Click on the PLUS (+) sign next to your “For each item in keywords” selection and choose the “Begin New Entry” command. This command will be named “list1” by default.

- Click on the PLUS (+) sign next to your “list1” tool and choose the “Select” command.

- With the select command, click directly on the Amazon search bar to select it.

- This will create an input command. Under it, choose “expression” from the dropdown and enter the word “item” in the text box.

- Now we will make it so ParseHub adds the keyword for each result next to it. To do this, click on the PLUS (+) sign next to the “list1” command and choose the “extract” command.

- Under the extract command, enter the word “item” into the first text box.

- Now, let’s tell ParseHub to perform the search for the keywords in the list. Click on the PLUS (+) sign next to your “list1” selection and choose the select command.

- Click on the Search Button to select it and rename it to “search_bar”.

- Click on the PLUS (+) sign next to your “search_bar” selection and choose the “Click” command.

- A pop-up will appear asking you if this is a “next page” button. Click on “No” and rename your new template to “search_results”.

- Now, let’s navigate to the search results page of the first keyword on the list and extract some data.

- Start by switching over to browse mode on the top left and search for the first keyword on the list.

- Once the page renders, make sure you are still working on your new “search_results” template by selecting it with the tabs on the left.

- Now, turn off Browse Mode and click on the name of the first result on the page to select it. It will be highlighted in green to indicate that it has been selected.

- The rest of the products on the page will be highlighted in yellow. Click on the second one on the list to select them all.

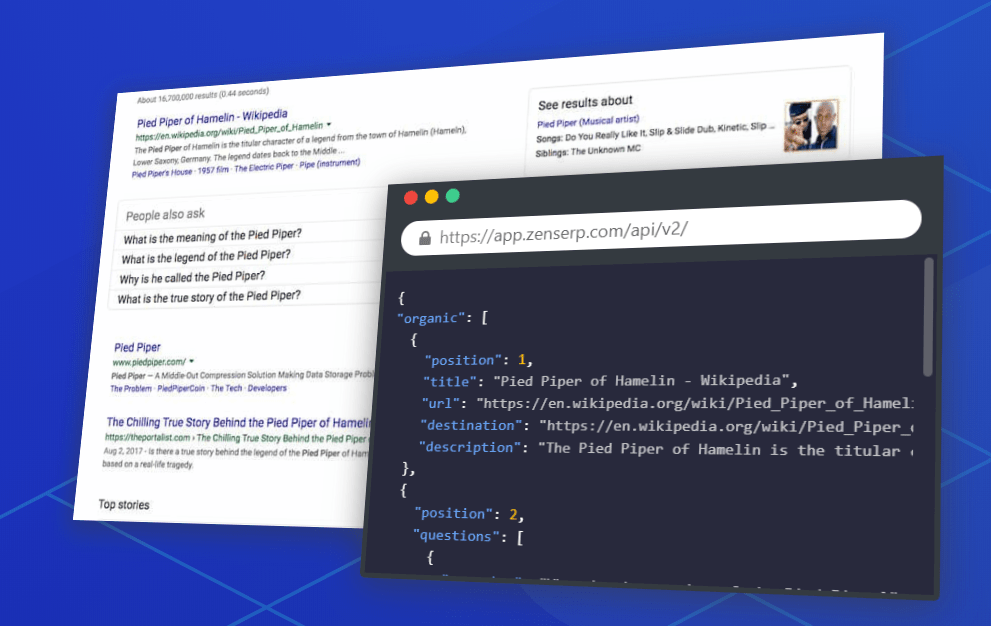

- ParseHub is now extracting the product name and URL for each product on the first page of results for each keyword.
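The keyword list from the “Starting Value” step can also be generated with a short script rather than typed by hand. The sketch below builds the CSV text with the “keywords” header cell and an equivalent JSON string. The sample keywords and the JSON shape (an object holding a “keywords” array) are assumptions for illustration, so check the result against what ParseHub accepts before relying on it.

```python
import csv
import io
import json

# Hypothetical sample keywords; replace with your own list.
keywords = ["wireless mouse", "usb-c hub", "laptop stand"]


def keywords_to_csv(keywords):
    """Return CSV text with a "keywords" header cell and one keyword per row."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["keywords"])
    for keyword in keywords:
        writer.writerow([keyword])
    return buffer.getvalue()


def keywords_to_json(keywords):
    """Return a JSON string for the starting-value text box (assumed shape)."""
    return json.dumps({"keywords": list(keywords)}, indent=2)


print(keywords_to_csv(keywords))
print(keywords_to_json(keywords))
```

Saving the CSV text to a file gives you something to upload, while the JSON string can be pasted into the text box directly.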

Do you want to extract more data from each product? Check out our guide on how to scrape product data from Amazon including prices, ASIN codes and more.

Do you want to extract more pages worth of data? Check out our guide on how to add pagination to your project and extract data from more than one page of results.

Running your Scrape

It is now time to run your project and export it as an Excel file.

To do this, click on the green “Get Data” button on the left sidebar.

Here you will be able to test, run or schedule your scrape job. In this case, we will run it right away.

Closing Thoughts

ParseHub is now off to extract all the data you’ve selected. Once your scrape is complete, you will be able to download it as a CSV or JSON file.
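Once the file is downloaded, a few lines of Python can group the results by keyword. This is only a sketch: the column names keyword, name and url and the sample rows are hypothetical, since the real headers depend on how you named your selections in the project.

```python
import csv
import io
from collections import defaultdict

# Hypothetical export contents; a real file would be opened with open(...).
sample_export = """keyword,name,url
wireless mouse,Example Mouse A,https://example.com/a
wireless mouse,Example Mouse B,https://example.com/b
usb-c hub,Example Hub,https://example.com/c
"""


def group_by_keyword(csv_file):
    """Map each keyword to the list of (name, url) pairs scraped for it."""
    grouped = defaultdict(list)
    for row in csv.DictReader(csv_file):
        grouped[row["keyword"]].append((row["name"], row["url"]))
    return dict(grouped)


results = group_by_keyword(io.StringIO(sample_export))
for keyword, products in results.items():
    print(f"{keyword}: {len(products)} result(s)")
```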

You now know how to search through a list of keywords and extract data from each result.

What website will you scrape first?

lxml and Requests

lxml is a pretty extensive library written for parsing XML and HTML documents very quickly, even handling messed up tags in the process. We will also be using the Requests module instead of the already built-in urllib2 module due to improvements in speed and readability. You can easily install both using pip install lxml and pip install requests.

Let’s start with the imports:
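A minimal version of those imports, assuming both packages were installed with pip as described above, might look like this:

```python
# lxml's html module parses HTML into an element tree.
from lxml import html

# Requests retrieves the page over HTTP.
import requests
```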

Next we will use requests.get to retrieve the web page with our data, parse it using the html module, and save the results in tree:

(We need to use page.content rather than page.text because html.fromstring implicitly expects bytes as input.)
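As a rough sketch of that step, the helper below wraps the download and the parse in one function. The name fetch_tree, the timeout value and the status check are assumptions added for illustration. So that the example runs without a network connection, the last lines parse a small hypothetical byte string in place of page.content.

```python
from lxml import html
import requests


def fetch_tree(url, timeout=10):
    """Download a page and parse it into an lxml element tree."""
    page = requests.get(url, timeout=timeout)
    page.raise_for_status()  # stop early on 4xx/5xx responses
    # Pass the raw bytes (page.content) rather than the decoded page.text.
    return html.fromstring(page.content)


# Offline demonstration with bytes standing in for page.content.
sample = b"<html><body><p>Hello</p></body></html>"
tree = html.fromstring(sample)
print(tree.xpath("//p/text()"))
```

fetch_tree is only defined here, not called, so nothing is requested until you pass it a real URL.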

tree now contains the whole HTML file in a nice tree structure which we can go over two different ways: XPath and CSSSelect. In this example, we will focus on the former.

XPath is a way of locating information in structured documents such as HTML or XML documents. A good introduction to XPath is on W3Schools.

There are also various tools for obtaining the XPath of elements such as FireBug for Firefox or the Chrome Inspector. If you’re using Chrome, you can right click an element, choose ‘Inspect element’, highlight the code, right click again, and choose ‘Copy XPath’.

After a quick analysis, we see that in our page the data is contained in two elements – one is a div with title ‘buyer-name’ and the other is a span with class ‘item-price’:

Knowing this we can create the correct XPath query and use the lxml xpath function like this:
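The queries might look like the sketch below. The markup in sample_page is hypothetical and only mirrors the structure described above (a div with title ‘buyer-name’ and a span with class ‘item-price’), so the example can run without fetching anything.

```python
from lxml import html

# Hypothetical markup mirroring the structure described in the text.
sample_page = b"""
<html><body>
  <div title="buyer-name">Alice Example</div>
  <span class="item-price">$19.99</span>
  <div title="buyer-name">Bob Example</div>
  <span class="item-price">$5.00</span>
</body></html>
"""

tree = html.fromstring(sample_page)

# Text of every div whose title attribute is "buyer-name".
buyers = tree.xpath('//div[@title="buyer-name"]/text()')
# Text of every span whose class attribute is "item-price".
prices = tree.xpath('//span[@class="item-price"]/text()')
```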

Let’s see what we got exactly:
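A simple way to inspect the two lists is to print them, then pair them up by position. The values below are hypothetical stand-ins for the scraped data, and the pairing assumes the page lists exactly one price per buyer, in the same order.

```python
# Hypothetical values standing in for the lists returned by the XPath queries.
buyers = ["Alice Example", "Bob Example"]
prices = ["$19.99", "$5.00"]

print("Buyers:", buyers)
print("Prices:", prices)

# Pair each buyer with the price at the same position.
pairs = list(zip(buyers, prices))
for buyer, price in pairs:
    print(f"{buyer} paid {price}")
```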

Congratulations! We have successfully scraped all the data we wanted from a web page using lxml and Requests. We have it stored in memory as two lists. Now we can do all sorts of cool stuff with it: we can analyze it using Python or we can save it to a file and share it with the world.
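Saving the two lists to a file could be done with the standard csv module, for instance. In this sketch the function name write_rows, the column headers and the sample values are all assumptions, and an in-memory buffer stands in for a real file.

```python
import csv
import io


def write_rows(fileobj, buyers, prices):
    """Write buyer/price pairs as CSV rows under a header line."""
    writer = csv.writer(fileobj, lineterminator="\n")
    writer.writerow(["buyer", "price"])
    writer.writerows(zip(buyers, prices))


# Hypothetical sample data; an in-memory buffer keeps the example self-contained.
buffer = io.StringIO()
write_rows(buffer, ["Alice Example", "Bob Example"], ["$19.99", "$5.00"])
print(buffer.getvalue())
```

To write to disk instead, pass a file opened with open("results.csv", "w", newline="") in place of the buffer.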

Some more cool ideas to think about are modifying this script to iterate through the rest of the pages of this example dataset, or rewriting this application to use threads for improved speed.
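One possible shape for the threaded version uses a thread pool from the standard library. Everything named here is an assumption for illustration: scrape_pages, the stand-in fake_scrape function and the example.com page URLs. A real version would replace fake_scrape with a function that downloads and parses one page.

```python
from concurrent.futures import ThreadPoolExecutor


def scrape_pages(urls, scrape_one, max_workers=4):
    """Run scrape_one(url) for every URL on a thread pool, keeping input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(scrape_one, urls))


def fake_scrape(url):
    """Stand-in for a real per-page scraper, so the sketch runs offline."""
    return {"url": url, "buyers": [], "prices": []}


# Hypothetical page URLs; the real URL pattern depends on the site.
page_urls = [f"https://example.com/page/{n}" for n in range(1, 4)]
results = scrape_pages(page_urls, fake_scrape)
print([result["url"] for result in results])
```

Threads suit this job because most of the time is spent waiting on the network, and pool.map returns results in the same order as the input URLs.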